Artificial Intelligence and Virtual Terrorism

Artificial Intelligence (AI) broadly refers to the study and creation of information systems that use computer algorithms to perform tasks normally requiring human intelligence, such as speech recognition, visual perception, and decision-making. Computers and software are not, by nature, self-aware, emotional, or intelligent the way human beings are. They are, rather, tools that carry out the functions encoded in them, which derive from the intelligence of their human programmers.

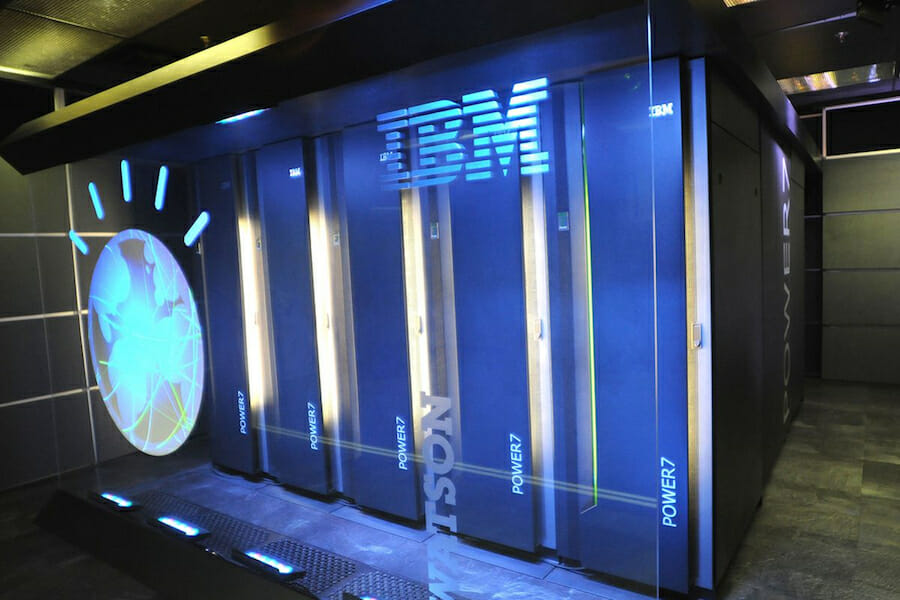

IBM has applied AI to security in the form of its Watson “cognitive computing” platform. The company has taught Watson to read through vast quantities of security research: some 60,000 security-related blog posts are published every month, and 10,000 reports are produced each year. IBM has scores of customers using Watson as part of their security intelligence and analytics platform.

Some scientists who work in the AI field believe that AI robots will be living and working with us within the next decade. Autonomous animated avatars are already being created, such as “Baby X,” a virtual infant that learns through experience and can “feel” emotions. Adult avatars can be plugged into existing platforms like Watson, essentially putting a face on a chatbot. Within a decade, humans may well be interacting with lifelike, emotionally responsive AI robots, very similar to the premise of the HBO series Westworld and the film I, Robot. Before that becomes a reality, however, robotics will need to catch up with AI technology.

As Stephen Hawking noted in 2014, “Whereas the short-term impact of AI depends on who controls it, the long-term impact depends on whether it can be controlled at all.” He believes we are on the cusp of the kinds of AI that were previously exclusive to science fiction films. Google acquired robotics maker Boston Dynamics in late 2013 and purchased DeepMind Technologies (an AI company) in 2014. The amount of money that Google and other commercial companies will continue to pour into robotics and AI may create a world in which smart robots do indeed roam our streets, I, Robot style.

Apple created a paradigm shift with the launch of the iPhone in 2007. Ten years later, it is estimated that 44 percent of the world’s population owned a smartphone; in 2017 smartphones were as natural a part of our lives as ordinary cell phones were in 2006. We adapt to change quickly. Given how rapidly the technology (and our comfort level with it) has evolved, it is not hard to imagine that by 2027 the suite of apps and services that today revolve around the typical smartphone will have migrated to other, even more convenient and capable smart devices, giving us “smart bodies,” with devices and sensors residing on our wrists, in our ears, on our faces, and perhaps elsewhere on our bodies.

All this comes at a price. If we want our lives to be enhanced by AI, we will have to submit to constant surveillance, both by our devices and by the tech giants themselves. In many respects, we already have. Billions of people who use “smart” devices agree to these companies’ Terms of Service without reading them, which has given these firms total control of the data and images we stream to them. These companies will of course swear they will encrypt our data and keep it confidential, but we are required to hand them the keys to our lives in the process.

Malware inevitably becomes part of any Internet-based ecosystem, and the coming generation of it will be situation-aware: it will understand the environment it is in and make calculated decisions about what to do next, behaving like a human attacker by performing reconnaissance, identifying targets, choosing methods of attack, and intelligently evading detection. This next generation of malware uses code that is a precursor to AI, replacing traditional “if not this, then that” code logic with more complex decision-making trees. Autonomous malware operates much like branch prediction technology, which is designed to guess which branch of a decision tree a transaction will take before it is executed. A branch predictor keeps track of whether or not a branch was taken, so that when it encounters a conditional action it has seen before, it can predict the outcome; over time, the software becomes more efficient.
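To make the branch prediction analogy concrete, here is a minimal sketch of a two-bit saturating-counter predictor, a standard textbook design. The article does not say which predictor scheme it has in mind, so the choice of scheme, the starting counter value, and the names `TwoBitPredictor`, `accuracy`, and `loop_branch` are all assumptions made for illustration. The sketch shows only how a predictor learns from outcomes it has seen before; it does not model malware.

```python
from collections import defaultdict


class TwoBitPredictor:
    """Illustrative two-bit saturating-counter branch predictor (hypothetical class)."""

    def __init__(self):
        # One counter per branch. Values 0-1 predict "not taken", 2-3 predict "taken".
        # Starting at 1 (weakly "not taken") is an arbitrary choice for this sketch.
        self.counters = defaultdict(lambda: 1)

    def predict(self, branch_id):
        # Predict "taken" when the counter is in the upper half of its range.
        return self.counters[branch_id] >= 2

    def update(self, branch_id, taken):
        # Move the counter toward the observed outcome, saturating at 0 and 3.
        counter = self.counters[branch_id]
        self.counters[branch_id] = min(counter + 1, 3) if taken else max(counter - 1, 0)


def accuracy(predictor, branch_id, outcomes):
    """Return the fraction of outcomes predicted correctly, learning as it goes."""
    correct = 0
    for taken in outcomes:
        if predictor.predict(branch_id) == taken:
            correct += 1
        predictor.update(branch_id, taken)
    return correct / len(outcomes)


# A loop-style branch: taken nine times, then not taken once, repeated ten times.
outcomes = ([True] * 9 + [False]) * 10
print(accuracy(TwoBitPredictor(), "loop_branch", outcomes))
```

On this made-up sequence the predictor misses the very first branch and the single loop exit in each cycle, so it gets 89 of 100 outcomes right. The two-bit counter is what keeps one surprising outcome from flipping the next prediction, which is the sense in which the predictor improves with history.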

Autonomous malware is guided by the collection and analysis of “offensive intelligence,” such as the types of devices deployed in a network to segment traffic flow, the applications being used, transaction details, or the time of day transactions occur. The longer a threat can persist inside a host, the more adept it becomes at operating independently, blending into its environment, selecting tools based on the platform it is targeting and, eventually, taking countermeasures based on the security tools in place.

AI and machine learning are becoming significant allies in cybersecurity. Machine learning is being bolstered by predictive applications to help safeguard networks. New security measures and countermeasures can also be provisioned or updated automatically as new devices, workloads, and services are deployed or moved anywhere in a network, from endpoints to the cloud. Tightly integrated and automated security enables a comprehensive threat response far greater than the sum of the individual security solutions protecting the network, which is part of the promise and the peril of AI.

Cyberspace is the ultimate 3D chessboard, with layers upon layers of moves and vulnerabilities, creating exponential threats that traditional thinking is simply not equipped to handle. Albert Einstein is often quoted as saying, “We cannot solve our problems with the same level of thinking that created them.” The use of AI allows us to take our thinking on cybersecurity to that next level and identify more advanced problems. Cognitive technology gives us new insights into current and future threats, allowing greater speed and precision in our response. The question is whether we will embrace the battle against Virtual Terrorism with the same degree of vigor that we bring to other forms of problem solving.